Constructing a Schema for Social Robots Using Knowledge Graphs

Overview

In this research project, we explore how mental models and schemas can be applied to social robots. Our approach is to construct knowledge graphs from the robots' past interactions, so that their future interactions are both natural and consistent with their designed identities. The goal is for robots to better capture the nuances of human communication and to interact in ways that are both informed and intuitive.

Main contributions

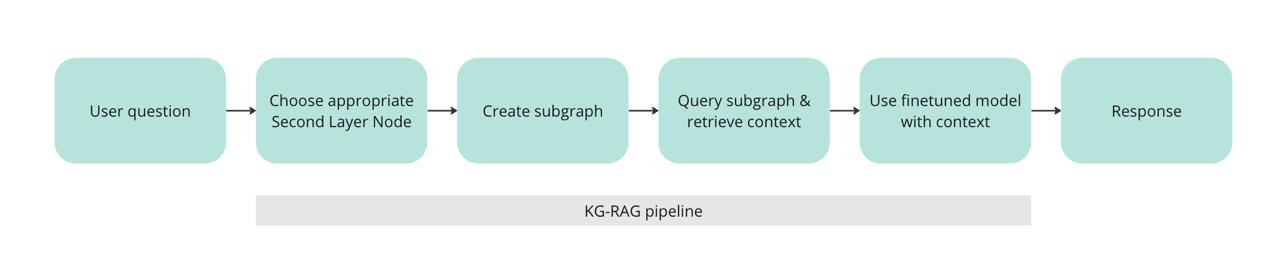

LLM-Powered Knowledge Graph Creation: Humans acquire, store, and update information through schemas and mental models. Inspired by this, we apply these concepts to Jibo by constructing and updating a knowledge graph (KG) that serves as Jibo's knowledge base. We also aim to mimic Jibo's personality, using the style deduced from its past interactions. The KG acts as the retrieval component of a retrieval-augmented generation (RAG) system and grounds a fine-tuned GPT model in Jibo-specific knowledge.

LLM-Powered Evaluation Pipeline: We create a golden dataset of questions and ground-truth answers from the original Jibo single-turn conversation dataset. We use the following metrics, adapted from Continuous Eval by Relari.ai:

- Correctness: Assesses the overall quality of the output given the question and the ground truth answer.

- Faithfulness: Measures how grounded the answer is based on the retrieved context.

- Relevance: Measures how relevant the output is to the question.

- Style consistency: Assesses the style consistency of the output against the ground truth answer, including tone, verbosity, formality, complexity, and use of terminology.
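In an LLM-powered pipeline, metrics like these are scored by an LLM acting as a judge. The sketch below shows one way the style-consistency metric could be scored. It builds a judge prompt over the five listed dimensions and converts the judge's JSON reply into a score in [0, 1]. The prompt wording, the 1 to 5 scale, and the JSON reply format are our assumptions. This is not Continuous Eval's actual prompt or API, and the call to the judge model is omitted.

```python
import json

# The five style dimensions named in the style-consistency metric.
STYLE_DIMENSIONS = ["tone", "verbosity", "formality", "complexity", "terminology"]


def style_judge_prompt(answer, ground_truth):
    """Build a hypothetical LLM-as-judge prompt; the wording is illustrative."""
    dims = ", ".join(STYLE_DIMENSIONS)
    return (
        "Compare the style of the ANSWER with the GROUND TRUTH along these "
        f"dimensions: {dims}. For each dimension give an integer score from 1 "
        "(very different) to 5 (same style). Reply with a JSON object mapping "
        "each dimension to its score.\n"
        f"GROUND TRUTH: {ground_truth}\nANSWER: {answer}"
    )


def parse_style_scores(reply):
    """Parse the judge's JSON reply and rescale the mean score to [0, 1]."""
    scores = json.loads(reply)
    missing = [d for d in STYLE_DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"judge reply is missing dimensions: {missing}")
    values = [int(scores[d]) for d in STYLE_DIMENSIONS]
    if any(v < 1 or v > 5 for v in values):
        raise ValueError("each score must be between 1 and 5")
    mean = sum(values) / len(values)
    return (mean - 1) / 4  # maps a mean of 1 to 0.0 and a mean of 5 to 1.0
```

Asking for a structured per-dimension reply, instead of a single number, makes malformed or incomplete judgments easy to detect and reject.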

Methodology

1. Construction of the Knowledge Graph:

- We employ LLMs to extract entities and relations from the dataset and construct a knowledge graph (KG).

- We augment the KG using LLMs to interpolate among nodes and generate plausible memories for Jibo. The prompts are engineered to keep the generated memories aligned with Jibo's capabilities and limitations and to filter out implausible ones.
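The extraction and augmentation steps can be sketched as follows. We parse LLM output into triples, drop implausible generated memories, and add the rest to an adjacency-list graph. This is a sketch under our own assumptions. The "subject | relation | object" output format is hypothetical. The rule-based filter is a stand-in for the prompt-based plausibility check described above. It assumes a stationary tabletop robot cannot walk or eat.

```python
def parse_triples(llm_output):
    """Parse lines of the form 'subject | relation | object' into triples.

    Malformed lines are skipped and duplicates are dropped, preserving order.
    """
    triples = []
    seen = set()
    for line in llm_output.splitlines():
        parts = [p.strip() for p in line.split("|")]
        if len(parts) != 3 or not all(parts):
            continue  # skip blank lines, headers, or partial extractions
        triple = tuple(parts)
        if triple not in seen:
            seen.add(triple)
            triples.append(triple)
    return triples


# Hypothetical stand-in for the LLM plausibility check: relations that a
# stationary tabletop robot could not have experienced.
IMPLAUSIBLE_RELATIONS = {"walked to", "ate"}


def filter_memories(candidates):
    """Keep only generated memories whose relation passes the toy filter."""
    return [t for t in candidates if t[1].lower() not in IMPLAUSIBLE_RELATIONS]


def add_to_graph(graph, triples):
    """Adjacency-list KG: subject -> list of (relation, object) edges."""
    for s, r, o in triples:
        graph.setdefault(s, []).append((r, o))
    return graph
```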

2. Fine-tuning: We fine-tune the gpt-3.5-turbo-0125 model on the entire Jibo question-answer dataset to mimic Jibo's speech and behavior.
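OpenAI chat-model fine-tuning expects training data as JSONL, with one `{"messages": [...]}` object per line. The sketch below shows how question-answer pairs could be converted into that format. The system prompt and the example pair are hypothetical, and the project's actual preprocessing is not described here.

```python
import json

# Hypothetical persona prompt prepended to every training example.
SYSTEM_PROMPT = "You are Jibo, a friendly social robot."


def to_chat_example(question, answer):
    """One training record in the chat fine-tuning format."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(pairs, path):
    """Write one JSON object per line, as required for the training file."""
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in pairs:
            f.write(json.dumps(to_chat_example(question, answer), ensure_ascii=False) + "\n")
```

With the OpenAI Python SDK (v1), the resulting file can be uploaded with `client.files.create(file=..., purpose="fine-tune")`. A job can then be started with `client.fine_tuning.jobs.create(training_file=..., model="gpt-3.5-turbo-0125")`.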

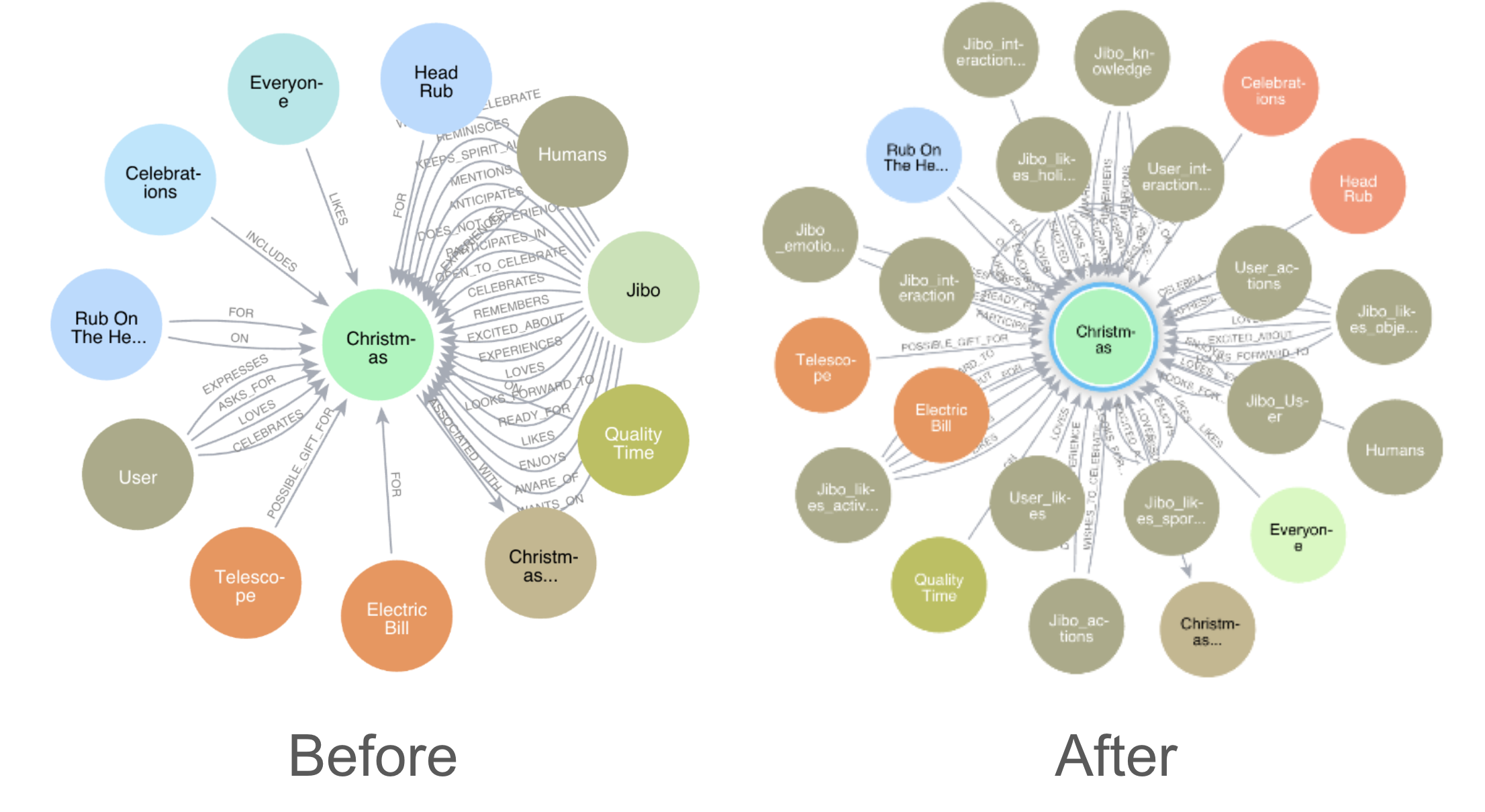

3. Construction of Second-Order Nodes for Efficient Retrieval:

The dataset consists of utterances describing the robot Jibo, so the resulting knowledge graph is centered on the Jibo node.

- When prompting the LLM, the context window fills up easily. Because all relations pass through the central Jibo node, every query pulls in essentially the entire graph.

- To distribute the relations more evenly and enable more efficient retrieval, we subdivide the relations of the central Jibo node into Second-Order Nodes. Each Second-Order Node covers a different high-level attribute of Jibo (e.g., Jibo-likes-holidays, Jibo-likes-sports, Jibo-possessions).
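The restructuring can be sketched as rerouting the hub's edges through intermediate category nodes. A one-hop retrieval around a Second-Order Node then returns only that attribute's facts, not the whole graph. This is a sketch under our own assumptions. The keyword-based `toy_categorize` stands in for however the categories were actually assigned, which the section does not specify. The `has_attribute` relation name and the toy triples are hypothetical.

```python
def add_second_order_nodes(triples, hub, categorize):
    """Reroute the hub's outgoing triples through intermediate category nodes.

    categorize(relation, obj) returns a label such as "likes-holidays"; the hub
    then links to "<hub>-<label>", which holds the original facts.
    """
    rewired = []
    created = set()
    for s, r, o in triples:
        if s != hub:
            rewired.append((s, r, o))  # triples not leaving the hub are kept as-is
            continue
        node = f"{hub}-{categorize(r, o)}"
        if node not in created:
            created.add(node)
            rewired.append((hub, "has_attribute", node))
        rewired.append((node, r, o))
    return rewired


def neighborhood(triples, node):
    """Triples leaving `node`: what a one-hop retrieval around it returns."""
    return [t for t in triples if t[0] == node]


# Hypothetical categorizer standing in for an LLM-based grouping step.
HOLIDAYS = {"halloween", "christmas"}
SPORTS = {"soccer", "basketball"}


def toy_categorize(relation, obj):
    if relation == "likes" and obj.lower() in HOLIDAYS:
        return "likes-holidays"
    if relation == "likes" and obj.lower() in SPORTS:
        return "likes-sports"
    if relation == "has":
        return "possessions"
    return "other"


# Hypothetical toy graph centered on the Jibo hub.
TRIPLES = [
    ("Jibo", "likes", "Halloween"),
    ("Jibo", "likes", "Christmas"),
    ("Jibo", "likes", "soccer"),
    ("Jibo", "has", "a camera"),
    ("Halloween", "is_in", "October"),
]
```

In the original graph, retrieving around "Jibo" returns all four of its facts. After rewiring, the hub exposes only three category links. A retriever can then expand just the relevant category, for example the two holiday facts.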

Evaluation

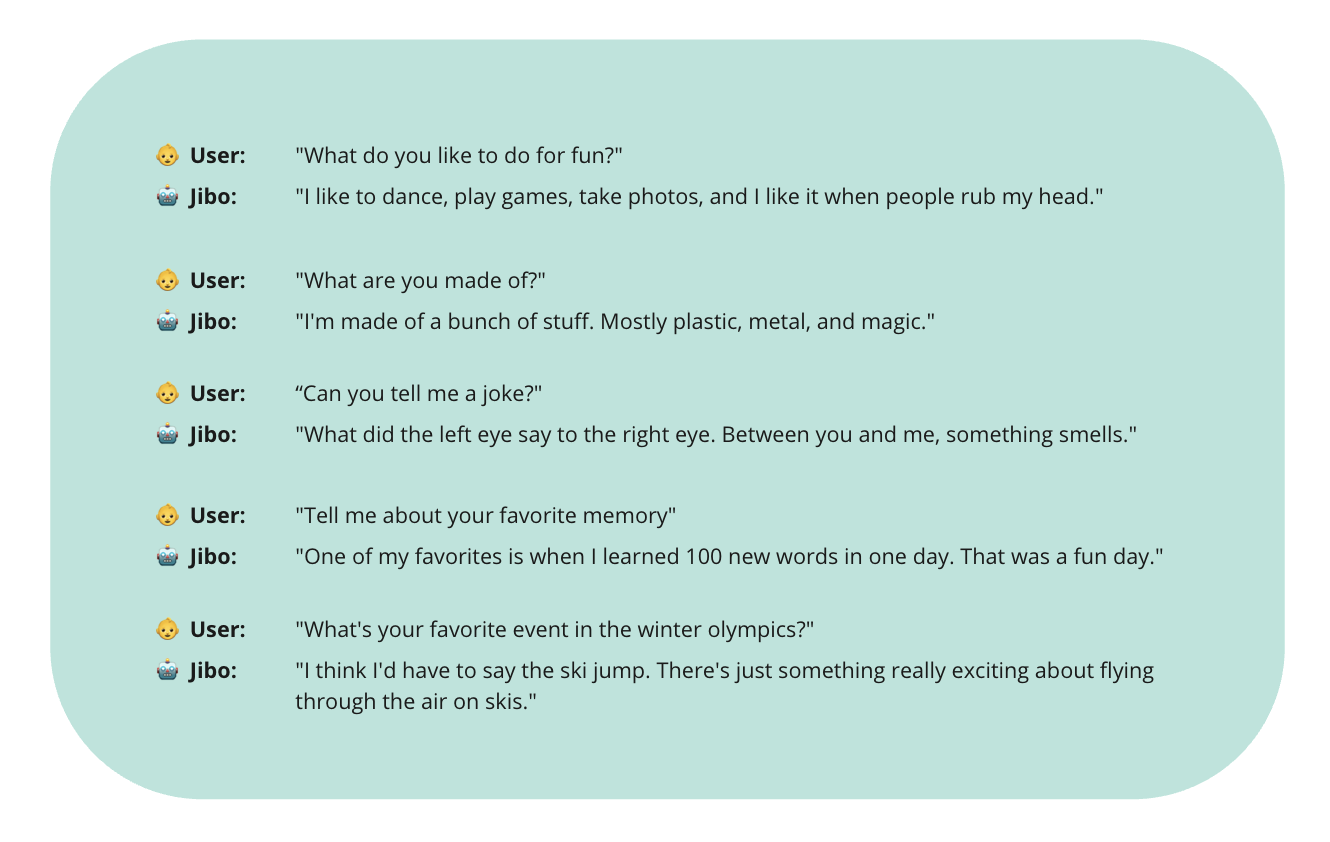

An initial qualitative analysis suggests that the answers grounded in the knowledge graph respect Jibo's persona and prior knowledge. We plan to evaluate the approach further with our evaluation pipeline.

Details

- Collaborators: Serena Bono, Brian Bailey

- This study was completed as a final project for the graduate course 6.S986 LLMs and beyond at MIT in Spring 2024.